Scaling Lilt's Connectors with Argo Workflows

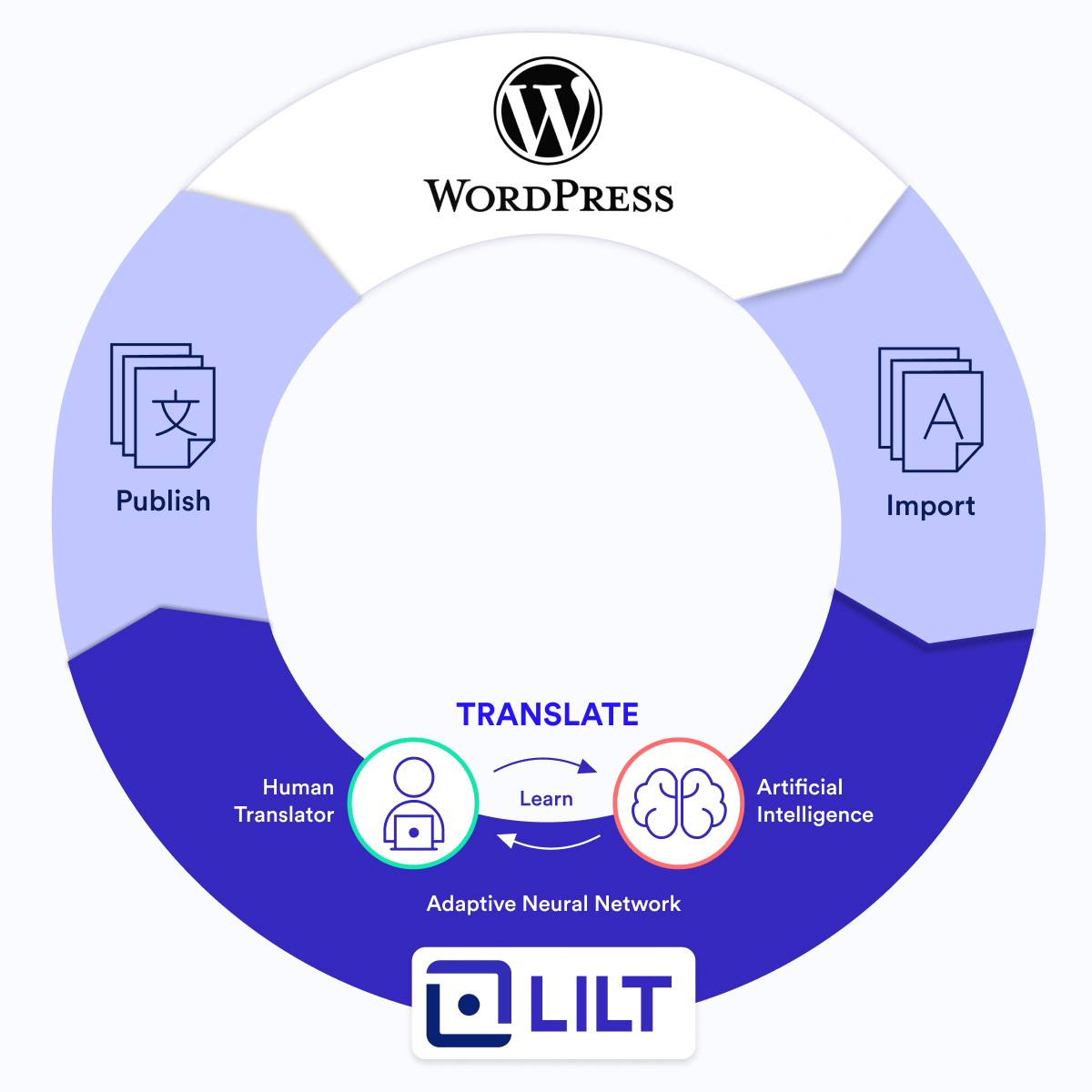

Lilt Connectors allow our customers to keep content on their preferred systems, like WordPress blog posts or Zendesk support wikis, and use Lilt behind the scenes for translation. Content is imported into Lilt and exported back to the original content system when translation is completed. Import and export happen without manual steps, and this efficiency plays a major role in helping Lilt make the world’s information more accessible.

Lilt currently provides connectors for over 15 different systems. Our customers rely heavily on Lilt connectors for their localization workflow. In 2021, nearly 90% of all the content translated on the Lilt platform arrived via a connector!

Our connector architecture wasn't always designed to support the incredible volume it currently handles. This article describes how Lilt uses Argo Workflows to scale our connectors framework, enabling it to become our largest enterprise offering today.

Our Early Days

In the nascent days of Lilt, each connector lived in its own GitHub repository, was developed by different engineers, and interacted with Lilt in subtly different ways using separate databases and schemas. Any change to our API meant updating the codebase in several places. On top of that, every connector came with a unique set of bugs, which were difficult to track and fix. Imagine being an engineer who had to maintain multiple connectors. Yikes!


💡 Fun Fact: Zendesk was our very first connector, and it was released in early 2019.

Unification of Connectors


It was evident that this model wouldn't scale as Lilt grew its connectors offering. So, we unified our connectors framework by first centralizing the code and configuring the connectors to run the same way regardless of the system being connected to. Once the code was in a single repository, we started using native Kubernetes Jobs to manage and execute all connector jobs.

This was a huge upgrade from our previous implementation and immensely more efficient. Then, a new kind of scaling problem emerged. Although Kubernetes itself was reliable, we eventually ran into resource constraints that caused reliability issues.


Scheduling

- Documents get imported to and exported from Lilt on a given schedule. We set a configuration for the management server to run the schedule, but Kubernetes frequently scheduled too many jobs at once (e.g. 50 in the same millisecond), depleting cluster memory and causing jobs to be dropped and lost.

- New jobs were stalled and couldn't be submitted due to a lack of memory.

Logging

- We run Kubernetes on Google Kubernetes Engine (GKE) and configured it to send application logs to Stackdriver, but the logs were disorganized and confusing.

- Manual filtering of logs in Stackdriver was time-consuming and cumbersome but required to determine the cause of failed jobs.

Job Clean-up

- Kubernetes did not clean up finished work for us. We were responsible for deleting completed jobs and pods and had to write and maintain separate clean-up code.

Facing these issues, we found ourselves in the same predicament as our earlier days — we needed a better solution, or we wouldn't scale!

Enter Argo

While evaluating solutions to revamp our connectors architecture, we considered Airflow and Prefect, but neither supported Kubernetes natively. Ultimately, we wanted something that runs on top of Kubernetes, and Argo Workflows is a container-based workflow engine that orchestrates jobs directly on Kubernetes. In addition, it solved our scaling pain via these features:

Log visibility and accessibility

- Argo collects more information on each execution than Stackdriver, enabling us to debug job failures more quickly.

- Out of the box, Argo supports saving logs. All we had to do was specify where to save them.

- Argo keeps a record of our historical workflows, giving us even more logs and visibility.
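
As a rough illustration of "specifying where to save them", the sketch below builds the kind of artifact repository settings Argo reads from its workflow controller configuration. The bucket name, secret name, and key layout are hypothetical placeholders, not Lilt's actual values.

```python
import json


def build_artifact_repository(bucket, secret_name="gcs-credentials"):
    """Return an Argo artifact repository config that archives pod logs to GCS.

    `bucket` and `secret_name` are illustrative assumptions.
    """
    return {
        "artifactRepository": {
            # Ask Argo to save the main container logs of every pod.
            "archiveLogs": True,
            "gcs": {
                "bucket": bucket,
                # One object prefix per workflow, one entry per pod.
                "keyFormat": "connector-logs/{{workflow.name}}/{{pod.name}}",
                # Kubernetes secret holding the service account key.
                "serviceAccountKeySecret": {
                    "name": secret_name,
                    "key": "serviceAccountKey",
                },
            },
        }
    }


if __name__ == "__main__":
    # JSON is valid YAML, so this output can be pasted into a config file.
    print(json.dumps(build_artifact_repository("example-connector-logs"), indent=2))
```

With log archiving switched on, logs remain retrievable after the pods themselves are gone.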

Reliable job management

- Argo allows us to define parallelism. If we have 100 jobs and set a limit of 5, Argo runs 5 at a time and starts the next job only when a running one finishes, which greatly improves reliability and eliminates the scheduling issues caused by using Kubernetes Jobs on their own.

- Argo uses cron workflows in conjunction with Kubernetes to schedule and execute jobs based on the resources required. Our scheduler is run directly through Argo.

- Scaling is effortless — when volume increases, we simply need to crank up the Kubernetes resources in Google Cloud Platform (GCP) for Argo to utilize them.

- Argo has garbage collection options to manage completed jobs.
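
To make the parallelism and garbage collection options concrete, here is a minimal sketch that builds a Workflow manifest as a Python dictionary. The `parallelism`, `ttlStrategy`, and `podGC` fields are standard Argo Workflows spec fields; the image name, template names, job IDs, and the one-hour retention are assumptions made for illustration.

```python
import json


def build_connector_workflow(job_ids, parallelism=5,
                             image="example.com/connectors/runner:latest"):
    """Build an Argo Workflow that fans out over job IDs with a concurrency cap.

    The image and argument names are hypothetical.
    """
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "connector-jobs-"},
        "spec": {
            "entrypoint": "run-all",
            # At most this many pods of the workflow run at the same time.
            "parallelism": parallelism,
            # Delete the Workflow object an hour after it finishes.
            "ttlStrategy": {"secondsAfterCompletion": 3600},
            # Delete each pod as soon as it completes.
            "podGC": {"strategy": "OnPodCompletion"},
            "templates": [
                {
                    "name": "run-all",
                    "steps": [[
                        {
                            "name": "run-job",
                            "template": "connector-job",
                            "arguments": {"parameters": [
                                {"name": "job-id", "value": "{{item}}"},
                            ]},
                            # One step instance per job ID.
                            "withItems": [str(j) for j in job_ids],
                        }
                    ]],
                },
                {
                    "name": "connector-job",
                    "inputs": {"parameters": [{"name": "job-id"}]},
                    "container": {
                        "image": image,
                        "args": ["--job-id", "{{inputs.parameters.job-id}}"],
                    },
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_connector_workflow(range(1, 101)), indent=2))
```

Raising the cap is a one-field change, which is why extra cluster capacity translates into throughput without touching the connector code.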

Seamless integration

- Argo is the "workflow engine for Kubernetes", meaning we wouldn't have to switch container orchestration tools.

- Integration into our architecture didn't require any code changes, allowing us to continue executing the code from our centralized repository.

- Setup requirements were minimal — we only needed to specify the Argo workflow configuration in a YAML file.

Ergo, Argo


After adopting Argo, our connectors services now consist of three main components:

- Management server

- Execution server

- Job execution

Management Server

The connectors-admin panel is the UI to the management server and provides an interface for users to perform tasks. The management server connects to our database and supports CRUD operations for configurations and jobs, such as creating and updating connector configurations. It also tracks the progress and log output of running jobs. All of this is available to users through the connectors-admin panel.

Execution Server

Argo is the execution server that's connected to a local Kubernetes cluster. With our upgraded architecture, jobs are submitted from the management server directly to Argo instead of Kubernetes. All interactions with Argo from the management server are done via Argo’s REST API.
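
A submission over Argo's REST API could look roughly like the sketch below, which posts a workflow manifest to the Argo Server's workflow creation endpoint. The server URL, namespace, and bearer token handling are assumptions for illustration; how the management server actually authenticates is not described here.

```python
import json
import urllib.request


def build_submit_request(base_url, namespace, workflow, token=None):
    """Build (but do not send) a POST request that creates a workflow."""
    url = f"{base_url.rstrip('/')}/api/v1/workflows/{namespace}"
    body = json.dumps({"workflow": workflow}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if token:
        # Hypothetical: a service account token passed as a bearer token.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def submit_workflow(base_url, namespace, workflow, token=None):
    """Send the request and return the created workflow as a dict."""
    request = build_submit_request(base_url, namespace, workflow, token)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Splitting request construction from sending keeps the first half easy to unit test without a running Argo Server.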


How It Works

- The management server submits jobs to Argo.

- Argo handles the scheduling of the workflow and ensures that the job completes. Our scheduler runs every 5-15 minutes and checks for new jobs to import or export.

- When a workflow is completed, Argo removes pods and resources.

- Argo stores completed workflow information in its own database and saves the pod logs to Google Cloud Storage. The pod logs can be retrieved and viewed by users on demand through the connectors-admin panel.
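
The recurring scheduler in the steps above maps naturally onto an Argo CronWorkflow. The following sketch builds one as a Python dictionary; the five-minute interval is taken from the low end of the range mentioned, while the resource name, image, command, and the choice to forbid overlapping runs are assumptions.

```python
import json


def build_scheduler_cron_workflow(schedule="*/5 * * * *",
                                  image="example.com/connectors/scheduler:latest"):
    """Build a CronWorkflow that periodically checks for new import/export jobs.

    The name, image, and arguments are hypothetical.
    """
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "CronWorkflow",
        "metadata": {"name": "connector-scheduler"},
        "spec": {
            # Standard cron syntax: every five minutes by default.
            "schedule": schedule,
            # Skip a run if the previous one is still in progress.
            "concurrencyPolicy": "Forbid",
            # Keep only a few finished runs around for inspection.
            "successfulJobsHistoryLimit": 3,
            "failedJobsHistoryLimit": 3,
            "workflowSpec": {
                "entrypoint": "check-for-jobs",
                "templates": [
                    {
                        "name": "check-for-jobs",
                        "container": {
                            "image": image,
                            "args": ["check-for-jobs"],
                        },
                    }
                ],
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_scheduler_cron_workflow(), indent=2))
```

Forbidding concurrent runs is one way to avoid the pile-up of simultaneous jobs that caused trouble with plain Kubernetes Jobs.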

Job Execution

While each connector is different, they are all executed in the same way. For customers with unique requirements in their localization workflow, we can use our connectors-admin panel to control how their content gets processed when jobs are executed and content is transferred to or from the Lilt platform.

Exeunt Argo

We implemented Argo Workflows at the start of 2021, and it has let our software scale to the staggering surge in volume we handle today. Since we adopted Argo, the number of documents imported to Lilt through a connector has increased by over 870%. We expect that number to climb as we continue to integrate Argo into our connectors framework.