Measuring and Comparing Machine Translation Quality

Over the last few decades, machine translation has become an increasingly common tool for companies that want to speed up and reduce the cost of scaling their localization programs. It has also helped make large amounts of the world’s content available to people who do not speak the language it was originally written in.

But machine translation is not a single, simple process that applies equally to every scenario. There are multiple types of machine translation systems, different approaches to using them, and several metrics for measuring how well they perform.

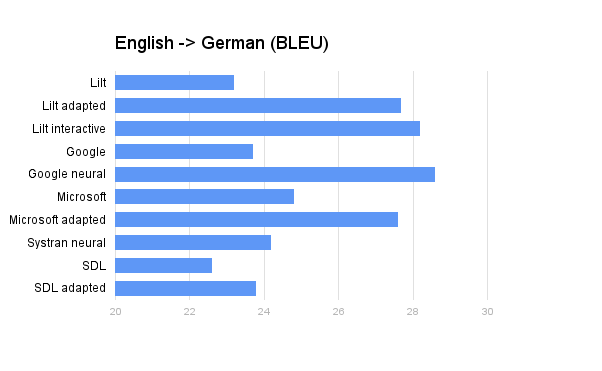

One of the most common ways to measure machine translation output is automatic evaluation with the Bilingual Evaluation Understudy, better known as the BLEU score. This long-standing standard compares raw machine translation output with a human reference translation by counting how many words and short word sequences (n-grams) the two share: the higher the score, the closer the two are to one another. With a little research and engineering, BLEU makes it possible to compare popular machine translation systems and see how they score.
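To make the idea concrete, here is a minimal sketch of a simplified, sentence-level BLEU score in Python. It assumes whitespace tokenization, a single human reference, equal weights for 1- to 4-grams, and no smoothing. The function names are hypothetical, and this is not necessarily the exact BLEU configuration used in the whitepaper.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4):
    """Simplified single-reference BLEU (hypothetical helper, for illustration).

    Geometric mean of clipped n-gram precisions for n = 1..max_n,
    multiplied by a brevity penalty. Assumes whitespace tokenization
    and applies no smoothing.
    """
    cand = candidate.split()
    ref = reference.split()
    if not cand:
        return 0.0

    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        # Clip each candidate n-gram count by how often it appears in the reference,
        # so repeating a correct word does not inflate the score.
        overlap = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0:
            # Without smoothing, any zero precision makes the whole score 0.
            return 0.0
        log_precision_sum += math.log(overlap / total) / max_n

    # Brevity penalty: penalize candidates shorter than the reference.
    if len(cand) > len(ref):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(ref) / len(cand))

    return brevity_penalty * math.exp(log_precision_sum)


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    print(sentence_bleu("the cat sat on the mat", reference))  # identical output: 1.0
    print(sentence_bleu("the cat sat on a mat", reference))    # one word changed: about 0.54
```

A single changed word lowers the score sharply because it breaks several overlapping 2-, 3-, and 4-grams at once. Published comparisons typically compute BLEU at the corpus level with standardized tokenization (for example with the sacreBLEU package) rather than averaging per-sentence scores like this sketch.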

In our whitepaper Measuring and Comparing Machine Translation Quality, we break down the details to help you understand the various types of machine translation and how to measure their quality, and we directly compare machine translation systems from Google, Microsoft, and Lilt to see how they stack up.

• • •

Download the whitepaper, and contact us to learn more about how Lilt’s language services can help meet your localization needs.